The problems with Python as a teaching language

Python was supposedly designed as an easy language for teaching. But there are design decisions which really don’t work well for that purpose. Some of these have been fixed or changed in the latest version of Python, so this criticism applies primarily to Python 2.7, but some things are still the same. Here are some ways it confuses students:

- The special case of print vs normal functions. Teaching people to write things like print "Hello World!" seems easy at first. And print lets you comma-separate an unlimited number of parameters like so: print "Hello", name, "welcome aboard!". But this kind of syntax is unique to print, which makes it extra confusing when the student tries to apply the same kind of string manipulation to other cases. I’ve seen numerous examples similar to this: input("Hello", name, "please enter a number:") which does not work because input is a normal function and only expects a single parameter. The print syntax teaches students to use commas to join strings, but that is not the correct way to join strings. Also, print forces a newline unless you end the statement with a trailing comma, as in print "No newline", and that ridiculous-looking syntax is really strange even to me.

- Excessive operator overloading. Most people, for whatever reason, seem to be able to handle the idea that + is used for arithmetic and string joining. But the usage of % for either modulo or format-substitution is endlessly confusing. Perhaps it’s because operations such as modulo and format-substitution are themselves new to the students. But people new to programming don’t necessarily see the lexical differences which we see. Where we see two expressions separated by a symbol, they see a jumble of characters. And it doesn’t help that the % is not only used to mean modulo and format-substitution, but is also used in the format string syntax. I get it, the choice of % makes perfect sense to me as it evokes the printf-style format string syntax. But newbies see a % here and a % there and get confused, often calling it “modulo” when they mean something else.

- Terrible error messages. This is something that every programming language compiler or interpreter struggles with. Having good error messages is actually really difficult, and requires a lot of extra work on the parser. But if Python wants to be seen as a teaching language, then it is critical to make the messages clear and direct. One problem is that the error messages are dumped in a large block of difficult to read text. A bigger problem is that Python, like many other interpreters, spits out an error message for the point in the program where it finally gave up, instead of the point in the program where the error really lies. As a long-time programmer, I’m accustomed to dealing with poor quality error messages. I’ve written parsers and I know how they get lost in poorly formed programs. But for students just learning, it is really confusing and disheartening to read error messages which make little sense and don’t even point at the erroneous line of code.

- IDLE sucks. This one isn’t so much on Python, although it does ship with it. Unfortunately, if you are expecting a good introduction to the Python programming environment, it isn’t this. It’s barely stable, and on Mac OS X, it’s even worse. I have students who can’t change the font size in the editing window because IDLE crashes every time they try. IDLE gets stuck in all sorts of weird ways to the point where I just advise students to restart the program. I’ve had students attempt to save files with the extension .py and end up with .py.py — but when they attempted to compensate by not including the extension, then the file was saved without any extension.

- Significant whitespace. This one cuts both ways. At least, when the program is written correctly, it is easier for me to read. But I’ve lost count of the number of times I’ve looked at a broken program and seen a place where the student accidentally hit the spacebar and inserted an extra space somewhere. They can’t see it, because they haven’t spent decades obsessively scanning text to ensure that it lines up. Still, if it weren’t whitespace, then it would probably be missing braces. This is an editor issue more so than a Python problem: IDLE should be better at showing significant whitespace visibly, and helping people put together blocks of code that are lined up properly.

- Dynamic typing. Being able to quickly assemble a running program with inconsistencies has the benefit of being able to run something that is half-broken. But that’s also the problem. And it loses newcomers, who are often not aware of the types of values they are dealing with because they are new to the concept of types. This gets worse when combined with operator overloading. Here’s an example: Python is flexible enough to permit you to make statements like for x in (1, 10): but the problem is that this usually happens when someone really intended for x in range(1, 10): so they are puzzled about why their program isn’t counting from 1 to 9. The punning is cool and concise for people who know what’s going on, but pure confusion for people who don’t. And the dynamic types make it too easy to keep going without noticing that you have an error.

- Assignment statements. After teaching some of this class, I think that functional programming advocates have a point when they say that imperative programming is not natural. The idea that a variable can change its value over time is causing people to get mixed up. Maybe worse, is the syntax Python uses, a = b. To non-programmers, that’s a mathematical equality, which is a symmetric relation (even if they don’t use fancy words like that). To programmers, that means a is a variable given some sort of storage and b is where the value comes from. To non-programmers, they don’t see why it can’t work both ways: if a = 5*6 works, then why not 5*6 = a? And they have difficulty with the different meaning of the same name on either side of the equal sign, e.g. total = total + 1. Just because C uses this syntax doesn’t mean it’s a good idea. Using the Pascal-style := or the Haskell-style <- makes the asymmetrical nature of the operator much more clear.

- eval is evil. Although we don’t teach students to use eval, there are functions such as input which implicitly use eval on what they read from the user. This allows them to type in all sorts of nasty little things and not realize that it’s wrong. Anyone who’s dealt with languages with eval-like functionality knows what can happen. For example, I found one student thinking that they had to type pieces of their program into the input prompt. Since input just evaluates it, they actually got correct responses, so they did not realize they were doing it wrong. Arguably, we could simply not teach the students about input. But it seems like an awfully strange decision to make the most naturally named function into the one with the biggest pitfalls.

- Broken module system. Students get confused because Python looks for modules in the current directory. Suppose you write a program to send e-mails and you call it email.py and you import smtplib. Congratulations, you just broke your program. Because smtplib imports another module named “email” and thanks to the poor design of the Python module system, it winds up trying to load your own program again, instead of looking for the email module in the Python installation directory.
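Two of the pitfalls above are easy to reproduce. What follows is a minimal sketch, written for Python 3 so that it runs on a current interpreter even though the complaints are mostly about 2.7. The function names are made up for illustration.

```python
def count_with_tuple():
    # (1, 10) is a two-element tuple, so the loop visits only 1 and 10.
    return [x for x in (1, 10)]


def count_with_range():
    # range(1, 10) is what the student meant: 1 through 9.
    return [x for x in range(1, 10)]


def percent_as_modulo(n):
    # Between two numbers, % is the remainder operator.
    return n % 3


def percent_as_format(n):
    # With a string on the left, % is format substitution, and the
    # format string itself contains yet another % character.
    return "%d items" % n


if __name__ == "__main__":
    print(count_with_tuple())
    print(count_with_range())
    print(percent_as_modulo(10))
    print(percent_as_format(10))
```

Neither loop raises an error, which is exactly why the tuple mistake goes unnoticed.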

Language design is often about compromise, and a lot of heavy lifting to make it practical. Python has the community and software available to make it useful and worthwhile for many purposes. But I find it really frustrating when an ostensible “teaching language” can cause so many problems for beginners.

October 10th, 2012 | Categories: hacking

SMP and cache coherency on Cortex-A9 MPCore

Last time I described in brief how to boot auxiliary processor cores on a Cortex-A9 MPCore Panda Board. I didn't go into too much depth about the synchronization mechanisms, however. It turns out to be rather tricky to do synchronization in the intermediate states between no cache coherency and full cache coherency with hardware support. Originally I had been enabling onboard caches (actually, just L1 with separate I and D, for OMAP4460) on CPU0 prior to SMP initialization and attempting to bring up the auxiliary CPUs using a global variable for synchronization until they were ready to enable caches themselves. This led to all sorts of wacky problems with memory not appearing as it should. I tried various schemes of cache invalidation and clean-up before finally just disabling caches for the purpose of bootstrapping auxiliary CPUs.

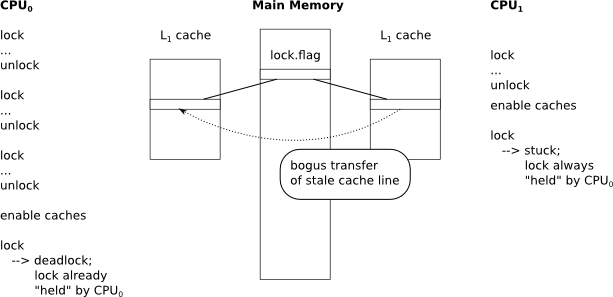

The trouble is, eventually those caches do have to get enabled. Now I had the auxiliary CPU enabling caches before CPU0 got around to doing it. This created another weird bug with the spinlock used to regulate the serial port debugging output. While CPU0 had caches disabled, it was working fine. But CPU1 had caches enabled and got stuck because it could never find a moment when the lock was considered "free." Then when CPU0 finally enabled caches, it ended up with a bogus cache line which claimed that CPU0 had already grabbed the lock. This triggered my deadlock detection code. My hypothesis is that as soon as CPU0 enabled its cache, it started receiving bogus cache line updates from CPU1 -- which was stuck in a world where CPU0 always had control over the lock. I decided to dodge the whole problem by simply forcing the secondary CPU to wait until the primary CPU had enabled caches, before attempting to use synchronization primitives.

With both CPUs having caching enabled, as well as the SCU, and the SMP bit, the spinlock implementation began to work correctly again, allowing both CPUs to spit out logging information over the serial port without trampling on each other.

August 17th, 2012 | Categories: hacking

PandaBoard and MPCore

I've ported the new operating system to the PandaBoard-ES, which is a more advanced platform than the BeagleBoard. It is based on the TI OMAP4460 with a Cortex-A9 ARM dual-core processor. Although there were many similarities with the OMAP3530 of the BeagleBoard, many of the memory mapped registers were found at different addresses. Also, the OMAP4460 makes use of the ARM Generic Interrupt Controller v1.0 which is a much more capable controller than what the OMAP3530 had. This is necessary because with multiple cores, the interrupt controller needs to be able to distribute interrupts to one or more CPUs. The GIC architecture is pretty nice and straightforward for what it does, more so than the Intel APICs. There is a central distributor and multiple CPU interfaces, all of which are managed through memory-mapped registers. The distributor is capable of being programmed with individual IRQ target lists and priorities, and the system can be programmed in a fine-grained manner to determine which IRQs have sufficient priority to be able to preempt processors and which do not.

Booting the second CPU turned out, in the end, to be much more straightforward than booting an Intel application processor, but the documentation is spread around the OMAP TRM as well as the Cortex-A9 MPCore TRM and a little difficult to get into one coherent picture. To begin with, all auxiliary CPUs are put into a power-saving loop where they repeatedly invoke the WFE (Wait For Event) instruction until certain conditions are satisfied. It is the job of the operating system on the primary processor to program the OMAP-specific AUX_CORE_BOOT_0 and AUX_CORE_BOOT_1 memory-mapped registers before executing the SEV (Send Event) instruction which wakes up all waiting processors. The first register contains bitflags which must be set in order for the auxiliary CPU to break out of its loop -- I just set every bit to 1 in that register. The second register contains the address of the boot code for the secondary processor, which I have assigned to an assembly routine which takes control and sets up the stack before entering C code.

Once both processors are running C code, I have a multi-stage initialization routine based upon the MPCore TRM (Multiprocessor Bring-up), although it seemed fairly forgiving when I did things out of order. Using a global variable for rudimentary synchronization, once the secondary processor boots, I have the primary processor enable the Snoop Control Unit. Once that is done, both processors go ahead and set the SMP bit in the Auxiliary Control register (ACTLR). Then I check the Snoop Control Unit's configuration to ensure that both processors are participating in SMP and coherency. Now the interrupt controller can be programmed to deliver interrupts to the second processor and the rest of initialization can proceed.

July 3rd, 2012 | Categories: hacking

Tagged TLBs and Context Switching

ARM seems to be a pretty fast moving architecture. I've had to rethink my design a number of times because it turned out my technical documents were a little old. One of the new features that I am happy to see is the introduction of "tagged TLBs" in ARMv6 and above.

Context switching is an important mechanism in a modern, virtual memory operating system. Every process gets its own memory space, and that is defined by a set of page tables. Since traversing the page tables for every single memory access would be too slow, the traversals are cached in a special area of the processor known as the Translation Lookaside Buffer (TLB). This buffer makes modern virtual memory feasible. However, like all caches, it must be invalidated when the information inside it becomes incorrect. Otherwise, you might allow one process to access the memory of another process -- a safety and security violation.

An operating system switches between different processes hundreds or thousands of times each second. Each time it switches, it may need to change the working set of page tables. And every time that happens, the TLB must be invalidated. This adds up to a heavy cost, as the TLB gets repopulated thousands of times per second. The amount of time it takes to flush the TLB and related caches is approximately 5 microseconds. This does not seem like much, but it is not the only cost. Subsequent memory operations are now adversely affected: they most likely must trigger hardware page traversal in order to translate the virtual address to a physical address. It is impossible to use a micro-benchmark to find the impact of TLB flushing on overall performance, as it depends on the specific application and its pattern of memory access. Whatever it is, the less flushing, the better.

Older ARM architectures added a feature to avoid TLB flushing on context switches in some cases: the Fast Context-Switch Extension (FCSE). If you were willing to follow some constraints, then you could avoid TLB flushing. It basically worked by creating an array of memory spaces, and indexing them by a given "process ID". Every virtual address in the bottom 32MB of the address space would be "modified" by adding 32MB multiplied by the process ID before it reached the caches and the MMU. Since ARMv6 this is deprecated, as it has been replaced with another option that is more widely used in industry: tagged TLBs.
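The FCSE address modification can be sketched in a few lines. This is my reading of the scheme rather than a quote from the manual: I am assuming that only addresses below 32MB are relocated and that everything else passes through unchanged. The function name is invented for the example.

```python
FCSE_SLOT_SIZE = 32 * 1024 * 1024  # each process ID owns one 32MB slot


def fcse_modified_address(virtual_address, process_id):
    """Return the modified virtual address under the assumed FCSE rule."""
    if virtual_address < FCSE_SLOT_SIZE:
        # Low addresses are shifted into the slot owned by this process ID.
        return virtual_address + process_id * FCSE_SLOT_SIZE
    # Assumption: addresses at or above 32MB are left alone.
    return virtual_address


if __name__ == "__main__":
    print(hex(fcse_modified_address(0x1000, 3)))
    print(hex(fcse_modified_address(0xC0008000, 3)))
```

Because every process sees its own code at the same low addresses while the MMU sees distinct slots, the TLB entries of different processes never collide, which is why no flush is needed.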

With tagged TLBs, there is extra space in every TLB entry which stores an 8-bit "Address Space ID (ASID)". In addition, the page tables now store a bit for every last-level entry which specifies whether that entry is "global" or not. When the MMU misses on a "non-global" page table entry, the TLB stores the result of the hardware page-table walk along with the current ASID. Later, when you access that same virtual address again, the MMU looks up the entry in the TLB. If the current ASID matches the stored ASID, then it uses the cached entry. Otherwise, it "misses" and re-walks the page tables. In essence, this dodges the security and safety problems of not flushing the TLB by checking an ASID number whenever it looks up an entry in the TLB.

Now when you switch contexts, you change the current set of page tables, and the current ASID. This cuts the immediate cost down to about 500 nanoseconds, an order of magnitude less than a full flush. However, the effect on overall performance is more difficult to quantify. Since you don't wipe out the TLB, it is possible that the old entries are still there when you return to the original process. On the other hand, it is also possible that other programs have wiped them out and replaced the entries with their own. Either way, this should be better than the old way of doing things; at worst, all your entries were cleared.
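To make the lookup rule concrete, here is a toy model of an ASID-tagged TLB. It is only a sketch: the class, its unlimited capacity and the dictionary-based "page tables" are all invented for illustration and say nothing about how real hardware is organized.

```python
class TaggedTLB:
    """Toy ASID-tagged TLB with unlimited capacity (an assumption)."""

    def __init__(self, page_tables):
        # page_tables: {asid: {virtual_page: (physical_page, is_global)}}
        self.page_tables = page_tables
        self.entries = {}        # (virtual_page, asid or None) -> physical_page
        self.current_asid = None
        self.walks = 0           # number of simulated page-table walks

    def switch_to(self, asid):
        # A context switch only changes the current ASID; nothing is flushed.
        self.current_asid = asid

    def translate(self, virtual_page):
        # Hit if the entry is tagged with the current ASID or is global.
        for key in ((virtual_page, self.current_asid), (virtual_page, None)):
            if key in self.entries:
                return self.entries[key]
        # Miss: walk the current page tables and cache the result.
        self.walks += 1
        physical_page, is_global = self.page_tables[self.current_asid][virtual_page]
        tag = None if is_global else self.current_asid
        self.entries[(virtual_page, tag)] = physical_page
        return physical_page
```

In this model, two processes can map the same virtual page to different physical pages without a flush between them, and a global entry filled by one process is a hit for the other.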

I also found that ARMv7 has this feature permanently turned on. According to the ARMv6 technical manual, it was possible to toggle tagged TLBs. The trade-off was that the additional bits in the page table entries would take up the space formerly used by sub-page access permissions. Then I was reading through the Cortex-A8 technical manual and noticed that the bit was permanently set. Just another example of how things change quickly.

Another cute feature of ARM is the ability to access the hardware translation mechanism from software. Using CP15, c7, c8 and CP15, c7, c4, you can write a virtual address and read the result of the physical address translation, if successful. Without this feature, you must implement the walking of tables in software, and that involves either finding a virtual address from a physical address, or more likely, mapping the physical address temporarily. This is slow and has the annoying requirement of manipulating virtual memory structures that you may prefer to leave alone.

December 6th, 2011 | Categories: hacking

Mystery bugs

I was working on part of my virtual memory management functionality when I decided to test what I had so far. The function was supposed to find and map a region of virtual memory by looking for and writing into a range of pagetable entries. It seemed to be working and generating the correct addresses. But when I tried to actually use the newly mapped page, it caused a "data abort" exception.

I hardcoded the address and attempted to isolate the problem. The strangest part seemed to be that the "data abort" exception would occur if and only if I looked at the pagetable entry with some kind of read operation beforehand. If I blindly wrote the new entry into place, it was fine (which is good, because that is how I have been doing things up until now). But if I checked to see if I was using an available entry, it would cause the later code to crash. It would even crash if I examined only the neighboring pagetable entries. This kind of behavior "smells" like some kind of stale cache problem. But it didn't make any sense. After all, the cache should be largely transparent to the processor, besides affecting performance. I even tried flushing the entire cache, but to no avail.

But then while reading through the Cortex-A8 manual, in the chapter about the TTB register, I came across this little tidbit:

"Note: The processor cannot perform a translation table walk from L1 cache. Therefore, if C is set to 1, to ensure coherency, you must store translation tables in inner write-through memory. If you store the translation tables in an inner write-back memory region, you must clean the appropriate cache entries after modification so that the mechanism for the hardware translation table walks sees them."

In other words, the MMU was skipping over the cache and going directly to memory. But by reading the pagetable entry, I was bringing the line into cache. And since it was configured using the efficient "write-back" option, the subsequent pagetable updates were not being written directly into main memory. I needed to clean and invalidate the cache line. But I thought I had tried that. Upon further investigation, it turns out that the Cortex-A8 does not seem to have the same "flush entire cache" operations that older manuals talk about. But if I insert a "clean and invalidate cache" instruction that applies to the pagetable entry's address, then everything starts working again.
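The failure mode can be replayed with a toy model. It rests on assumptions that I am labelling loudly: a write-back cache with 64-byte lines that allocates a line on reads but not on writes, and a table walker that reads main memory only. The class and method names are hypothetical.

```python
LINE_SIZE = 64  # bytes per cache line (assumed)


class ToyWriteBackCache:
    def __init__(self):
        self.memory = {}       # address -> value actually in main memory
        self.resident = set()  # line numbers currently held in the cache
        self.dirty = {}        # address -> value written but not yet cleaned

    def cpu_read(self, address):
        # Reads allocate the whole line, so neighbours become resident too.
        self.resident.add(address // LINE_SIZE)
        return self.dirty.get(address, self.memory.get(address, 0))

    def cpu_write(self, address, value):
        if address // LINE_SIZE in self.resident:
            self.dirty[address] = value   # stays in the cache (write-back)
        else:
            self.memory[address] = value  # assumed: no allocation on write

    def clean_and_invalidate(self, address):
        line = address // LINE_SIZE
        for dirty_address in [a for a in self.dirty if a // LINE_SIZE == line]:
            self.memory[dirty_address] = self.dirty.pop(dirty_address)
        self.resident.discard(line)

    def table_walk_read(self, address):
        # The hardware walker bypasses the cache entirely.
        return self.memory.get(address, 0)
```

Reading a neighbouring entry and then writing a new one leaves the walker looking at stale memory until the line is cleaned, which matches the symptom described above.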

A lot of systems code is about caches and management of caches, of all kinds. They make efficient operation possible. But coming from an x86 background, it never occurred to me that parts of the CPU might not be able to use the L1 cache (especially since the L1 data cache is physically indexed in the Cortex-A8). At least, not until I saw this strange behavior occur.

November 18th, 2011 | Categories: hacking

The Dark Art of Linker Scripts

There are many ways in which writing operating system code is like writing ordinary application code: you still need libraries, runtime support, and you use many common algorithms. However, there are also many ways in which it is different: for example, you are responsible for providing the libraries and runtime support. Most importantly, a kernel does not have the luxury of taking a simplistic view of memory.

A typical application assumes a flat memory layout with standardized addresses for program entry, stack and heap spaces. That illusion is provided by the operating system through the mechanism known as Virtual Memory which is implemented in hardware by the MMU. The system compiler produces object files that have symbol entries, and the system linker combines them into a single image using a linker script. The linker writes the final addresses for every symbol in the program (for dynamic linking, this step is deferred until runtime). You can get a list of symbols and addresses on any unstripped image by using the "nm" tool. You'll notice that on ordinary applications, the addresses are pretty much all very similar from program to program. That is because there is a default system-wide linker script for regular programs. You can see it by typing "ld --verbose" but it is likely to be very long and very confusing. Linker scripts are what would now be termed a "domain specific language" with the sole purpose of describing the layout of sections and symbols in an output file, combining many inputs.

There are a myriad of options and features, mostly tacked on here and there to support different platforms, formats or architectures. I will focus on our barebones ARM image in the ubiquitous Executable and Linking Format (ELF). You can read all about ELF and its use in ordinary circumstances elsewhere; I am going to focus on Puppy's needs. When examining ELF files you want to be familiar with the tools "readelf" and "objdump" (and keep in mind that we are using the "arm-none-linux-gnueabi-" tool-chain). Let's run "arm-none-linux-gnueabi-readelf -l" on "puppy.elf":

    Elf file type is EXEC (Executable file)
    Entry point 0x80008000
    There are 5 program headers, starting at offset 52

    Program Headers:
      Type       Offset   VirtAddr   PhysAddr   FileSiz MemSiz  Flg Align
      EXIDX      0x00c2c0 0xc000c2c0 0x8000c2c0 0x000f8 0x000f8 R   0x4
      LOAD       0x008000 0x80008000 0x80008000 0x0017c 0x0017c R E 0x8000
      LOAD       0x009000 0xc0009000 0x80009000 0x040d0 0x040d0 RWE 0x8000
      LOAD       0x010000 0xc0010000 0x8000d0d0 0x00000 0x08000 RW  0x8000
      GNU_STACK  0x000000 0x00000000 0x00000000 0x00000 0x00000 RWE 0x4

    Section to Segment mapping:
      Segment Sections...
       00     .ARM.exidx
       01     .startup .stubtext .stubARMidx
       02     .text .rodata .ARM.extab .ARM.exidx .rodata.str1.4 .data
       03     .bss
       04

Program headers list "segments" which describe memory layout. Section headers describe "sections" which partition the image file itself. Not all sections are loaded into memory -- some may just contain metadata for debuggers and such. In addition, sections that appear consecutively on disk may be loaded into disparate memory locations. For example, although ".data" and ".bss" are neighbors in the file, they are loaded into different program segments. In fact, ".bss" is interesting because it takes up zero space on disk, but some amount of space in memory. That is because it consists of uninitialized space for global variables. If you look carefully, you can see how that is represented in the program headers: the segment holding ".bss" has a FileSiz of 0 but a MemSiz of 0x8000.
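The same fields can be pulled out of the file by hand. The sketch below decodes one 32-bit little-endian program header with Python's struct module, using the standard Elf32_Phdr field order. The helper names are made up, and the sample bytes are rebuilt from the ".bss" row of the listing rather than read from puppy.elf.

```python
import struct

PT_LOAD = 1
PHDR_FORMAT = "<8I"  # Elf32_Phdr: eight little-endian 32-bit words


def parse_phdr(raw):
    """Decode one Elf32_Phdr into a dictionary of its fields."""
    (p_type, p_offset, p_vaddr, p_paddr,
     p_filesz, p_memsz, p_flags, p_align) = struct.unpack(PHDR_FORMAT, raw)
    return {
        "type": p_type, "offset": p_offset,
        "vaddr": p_vaddr, "paddr": p_paddr,
        "filesz": p_filesz, "memsz": p_memsz,
        "flags": p_flags, "align": p_align,
    }


def zero_fill_bytes(phdr):
    # Bytes that exist in memory but not in the file, e.g. .bss.
    return phdr["memsz"] - phdr["filesz"]


if __name__ == "__main__":
    # Hand-built copy of the .bss segment row (flags 0x6 = read + write).
    bss_raw = struct.pack(PHDR_FORMAT, PT_LOAD, 0x010000, 0xC0010000,
                          0x8000D0D0, 0x0, 0x8000, 0x6, 0x8000)
    print(hex(zero_fill_bytes(parse_phdr(bss_raw))))
```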

The most important aspect of the program header to note is that there is a difference between VirtAddr and PhysAddr. This is one of the distinguishing aspects of a kernel image vs an ordinary program image. A kernel has to deal with the real physical memory underlying the virtual memory abstraction. It also has to live inside the virtual memory world that it ends up creating. In other words, kernel symbols must be in two places at the same time. This trick can be pulled off by linkers because they understand that the memory addresses assigned to symbols may be completely unrelated to where the symbol ends up living inside the image. Doing this, however, requires work on your part: you must bootstrap the virtual memory system so that these symbol addresses are actually meaningful and not wild references off into unmapped areas.

In Puppy, the job of bootstrapping virtual memory is handled by the "stub". The stub is placed at the entry address. There is a small bit of assembler which sets up a temporary stack -- enough to run bare metal C code. The stub C code then does the necessary initialization of the memory hardware. This needs to be done in so-called "identity-mapped" space: where virtual and physical addresses are equivalent. Why? Because the moment you toggle on the MMU, the program counter is now working in virtual memory. The simplest way to keep the kernel running (and not worry about pipeline issues) is to make sure that the next instruction is the same regardless of whether you are addressing virtually or physically. Once the stub returns, the assembler sets up the permanent system stacks (in high virtual memory) and then jumps to the real kernel entry point, in high virtual memory.

Therefore, we lay out the sections for the stub according to this portion of the linker script:

    ENTRY(_reset)

    SECTIONS
    {
        . = 0x80008000;
        _physical_start = .;
        _stub_start = .;

        /* The stub runs in identity-mapped space */
        .startup : { init/startup.o (.text) }
        .stubtext : { init/stub.o (.text) }
        .stubARMtab : { init/stub.o (.ARM.extab) }
        .stubARMidx : { init/stub.o (.ARM.exidx) }

        _stub_end = ALIGN(0x1000);
        _stub_len = _stub_end - _stub_start;

I have selected the starting address, in physical memory, to be the same as Linux. Keep in mind that on the Beagle Board (OMAP353x), there are only two banks of SDRAM, and the first one has physical addresses beginning at 0x80000000. This is a bit weird if you are coming from x86, where physical memory starts at 0, but it makes sense because many other addresses are used for memory mapped device registers. The linker script maintains a running counter of "virtual memory" addresses, and you can set it to a value using a line such as ". = 0x80008000;". The primary job of the linker script is to create output sections and symbols with addresses derived from the counter. The output sections can mix and match input sections from the various object files. Here I have specified that the input section ".text" of "init/startup.o" should go into the output section named ".startup". Note that ".text" is a standardized section name which is used for executable code. ".stubtext" contains the C code. The stack unwinding information in ".stubARMtab"/".stubARMidx" is not really necessary for me, because I am not using C++ exceptions, but due to the vagaries of ARM linking, I must put this somewhere. I define a few convenient symbols for finding the starting and ending address of the stub, as well as its length.

The rest of the kernel is placed into high virtual memory. The reason for this is to leave the low virtual memory addresses for the use of ordinary programs. This is a pretty standard technique for OS kernels that use paged virtual memory. The main problem is that PhysAddr and VirtAddr can no longer be equivalent. That means we have to override the default. This can be accomplished with the "AT" option. It is also important that the physical addresses be kept as close together as possible. This is because the final image is not going to be ELF -- the bootloader doesn't understand it -- but rather a raw binary image. Any discontinuities between sections will be filled in with zeroes, resulting in a much larger image file than you probably intended.

        . = 0xC0008000;
        /* From now on, virtual and physical addresses are separate. */
        . += _stub_len; /* skip stub code */

We need to skip over the stub in virtual memory. The reason is simply that we are mapping a 1MB region that begins at 0xC0000000 to the physical address 0x80000000. Therefore, the stub code is going to take up the page at 0xC0008000. It can be reclaimed later.

        _kernel_start = .;

        /* The virtual address is taken from the counter, but the physical
         * address must be specified with AT. */
        .text : AT ( _stub_end ) { *(.text) }
        .rodata : AT ( LOADADDR (.text) + SIZEOF (.text) ) { *(.rodata) }
        . = ALIGN(0x1000);
        _kernel_readonly_end = .;

        .data : AT ( LOADADDR (.text) + _kernel_readonly_end - _kernel_start ) { *(.data) }
        .bss : AT ( LOADADDR (.data) + SIZEOF (.data) ) { *(.bss) }
        . = ALIGN(0x1000);
        . = . + 0x1000; /* 4kB of stack memory */
        svc_stack_top = .;

Since _stub_end is a physical address, "AT (_stub_end)" should be fairly self-explanatory. The subsequent sections are somewhat more difficult. Since we do not have the advantage of a physical memory counter, we must calculate the appropriate locations. Fortunately, we are given the LOADADDR and SIZEOF functions, which get the section PhysAddr and section length respectively. Using these tools we lay each output section consecutively in physical memory, combining the input sections from all object files (the ones not already used). Then we bump the counter by a page and make a symbol representing the supervisor stack top, which is used in startup assembler.

        _kernel_pages = (_kernel_end - _kernel_start) / 0x1000;
        _kernel_readonly_pages = (_kernel_readonly_end - _kernel_start) / 0x1000;
        _kernel_readwrite_pages = (_kernel_end - _kernel_readonly_end) / 0x1000;

        /* compute the physical address of the l1table */
        _l1table_phys = l1table - ((_kernel_start - _stub_len) - _stub_start);

        /* To make arm-none-linux-gnueabi-gcc shut up: */
        __aeabi_unwind_cpp_pr0 = .;
        __aeabi_unwind_cpp_pr1 = .;
    }

Linker scripts let us define arbitrary symbols, so I add some useful metadata about the kernel itself. In addition, there is another exception-related workaround: the two symbols at the end aren't used by my code, but I can't convince GNUEABI GCC to stop emitting references to them. This makes the linker stop complaining about undefined symbols.
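The arithmetic behind the _l1table_phys line is easier to follow with numbers plugged in. This sketch redoes the subtraction using values from the script and the readelf listing. The only inference is the 0x80009000 value for _stub_end, which I am taking from the PhysAddr of the first high-memory LOAD segment.

```python
STUB_START = 0x80008000                 # _stub_start, set in the script
STUB_END = 0x80009000                   # _stub_end, inferred from the listing
STUB_LEN = STUB_END - STUB_START        # _stub_len
KERNEL_START = 0xC0008000 + STUB_LEN    # _kernel_start after skipping the stub


def virt_to_phys(virtual_address):
    """Apply the same offset the script uses for _l1table_phys."""
    offset = (KERNEL_START - STUB_LEN) - STUB_START
    return virtual_address - offset


if __name__ == "__main__":
    # The high-memory LOAD segment starts at VirtAddr 0xc0009000.
    print(hex(virt_to_phys(0xC0009000)))
```

The offset works out to 0x40000000, the distance between the 1MB region at 0xC0000000 and the physical memory at 0x80000000 that it maps.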

Let's check the output of "arm-none-linux-gnueabi-nm puppy.elf":

    80008000 T _reset
    ...
    c0009018 T c_entry

Looks good, for now. But this won't be the end of tweaking the linker script. I've already spotted some small details that I would like to fix. And you can do other interesting things with linker scripts, like creating sections for constructors, per-cpu variables, or other special purposes. And while there is formal documentation for LD, it isn't really much of a help -- not to mention that the implementation is not always flawless. I've had to be careful with the linker version I use, as some language features change as bugs are fixed or new ones are introduced. Since linker script is considered more of a configuration file format instead of a programming language, it doesn't get the same level of careful management that might be given to C compiler front-ends. And it doesn't help that the primary writers of linker scripts are also the developers of LD itself, who are intimately familiar with the inner workings of their interpreter.

November 15th, 2011 | Categories: hacking

Virtual Memory, Interrupts and Timers

ARM has a fairly straightforward MMU design but with a couple of twists that are unfamiliar to me as a longtime x86 programmer. The page table structures are similar but of different sizes -- each first-level entry controls a 1MB region instead of 4MB. Also there is a 64kB option at the second level, but I am not going to bother with it, and will stick to 4kB pages. One small oddity is that it is possible to set access permissions down to the 1kB level -- there are 4 AP fields per page table entry.
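With 1MB first-level regions and 4kB pages, a 32-bit virtual address splits into a 12-bit first-level index, an 8-bit second-level index and a 12-bit page offset. A small sketch of that split follows; the function name is made up.

```python
def split_virtual_address(virtual_address):
    """Split a 32-bit address for the 1MB / 4kB page table layout."""
    l1_index = virtual_address >> 20           # 4096 entries, 1MB each
    l2_index = (virtual_address >> 12) & 0xFF  # 256 entries, 4kB each
    page_offset = virtual_address & 0xFFF      # byte within the 4kB page
    return l1_index, l2_index, page_offset


if __name__ == "__main__":
    l1, l2, offset = split_virtual_address(0xC0009018)
    print(hex(l1), hex(l2), hex(offset))  # prints: 0xc00 0x9 0x18
```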

The major twist is the notion of "Domains" which add another layer of protection that is integrated with the MMU. Basically, there are up to 16 Domains that you can program. Each first-level entry, and therefore every page under it, is assigned to a domain. There is a central domain register which contains 2 bits per domain and controls the access to every page, on top of the existing page table access permissions. So conceivably, you could share a TTB (translation table base) between multiple tasks, and then use domains to protect one from the other. Kind of like segments, in a way, but not limited to contiguous regions.
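To make the "2 bits per domain" register concrete, here is a minimal sketch of how a value for the domain register could be assembled. The field encodings (no access, client, manager) follow the ARM architecture manual as far as I know. The function and enum names are hypothetical, and writing the value to CP15 is left out so the snippet stays portable.

```c
#include <stdint.h>

/* Access modes for one 2-bit domain field. */
enum domain_access {
    DOMAIN_NO_ACCESS = 0x0, /* every access to the domain faults */
    DOMAIN_CLIENT    = 0x1, /* page table access permissions are checked */
    DOMAIN_MANAGER   = 0x3  /* page table access permissions are ignored */
};

/* Return a copy of 'dacr' with the field for 'domain' (0..15) set to 'mode'. */
static inline uint32_t dacr_set(uint32_t dacr, unsigned domain,
                                enum domain_access mode)
{
    unsigned shift = (domain & 0xFu) * 2u;

    dacr &= ~(0x3u << shift);              /* clear the old 2-bit field */
    return dacr | ((uint32_t)mode << shift);
}
```

Switching a task's domain between client and no-access this way is what would let several tasks share one translation table while staying isolated from each other.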

I'm not sure yet whether it is worthwhile to do something like that. There is another hardware feature which makes use of domains to provide something called the "Fast Context Switch Extension." Basically it allows you to assign a hardware process ID and avoid TLB flushes during context switches. But there are some limitations, including: 32MB max virtual size and no more than 16 process IDs. I need to do some microbenchmarks before I determine whether to pursue this. To do that I will need to get interrupts and timers working.
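The 32MB limit falls out of how the extension remaps addresses. As I understand it, a virtual address in the bottom 32MB gets the hardware process ID placed in its top 7 bits before the TLB lookup, and anything above 32MB passes through unchanged. A small sketch of that rule, with hypothetical names:

```c
#include <stdint.h>

#define FCSE_SLOT_SHIFT 25u                     /* 2^25 bytes = 32MB per process */
#define FCSE_SLOT_SIZE  (1u << FCSE_SLOT_SHIFT)

/*
 * Compute the "modified virtual address" for a given process ID.
 * Only addresses below 32MB are relocated into the process's slot.
 */
static inline uint32_t fcse_mva(uint32_t va, uint32_t pid)
{
    if (va < FCSE_SLOT_SIZE)
        return va | (pid << FCSE_SLOT_SHIFT);
    return va;
}
```

Because each process ends up in its own 32MB slice of one shared address space, its TLB entries do not collide with those of other processes, which is why the flush can be skipped. The sketch does not enforce the limit on the number of process IDs mentioned above.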

The OMAP353x interrupt controller is pretty well described in the TRM. There are 96 lines, though one of them covers all "external interrupt sources" whatever that may end up being. There are two interrupt inputs to the ARM processor itself: FIQ and IRQ. Programming it is pretty straightforward to start. I've just set all the lines to go to IRQ for the time being. In the future, there are a bunch of interesting possibilities: priorities, preemption and FIQ support. For now, I just need a way to get the timer interrupt.
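With 96 lines and 32-bit registers, the controller's per-line bits are spread over three banks. The sketch below only does the arithmetic for finding a line's bank, bit and routing register. The register offsets and the layout of the per-line routing register are written from memory and should be treated as assumptions to check against the TRM; the names are made up.

```c
#include <stdint.h>

#define INTC_NUM_LINES    96u
#define INTC_MIR_CLEAR0   0x088u /* assumed offset of the bank 0 unmask register */
#define INTC_BANK_STRIDE  0x020u /* assumed distance between register banks */
#define INTC_ILR0         0x100u /* assumed offset of the line 0 routing register */

/* Offset of the unmask register that holds the bit for 'line'. */
static inline uint32_t intc_mir_clear_offset(unsigned line)
{
    return INTC_MIR_CLEAR0 + (line / 32u) * INTC_BANK_STRIDE;
}

/* Bit mask for 'line' inside its 32-bit bank register. */
static inline uint32_t intc_line_mask(unsigned line)
{
    return 1u << (line % 32u);
}

/* Offset of the per-line routing register. */
static inline uint32_t intc_ilr_offset(unsigned line)
{
    return INTC_ILR0 + 4u * line;
}

/* Routing value: assumed priority in bits 7..2, bit 0 set for FIQ, clear for IRQ. */
static inline uint32_t intc_ilr_value(unsigned priority, int fiq)
{
    return ((priority & 0x3Fu) << 2) | (fiq ? 1u : 0u);
}
```

Routing every line to IRQ, as described above, would then amount to writing intc_ilr_value(0, 0) to each of the 96 routing registers and unmasking the lines of interest.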

While looking at the GCC documentation for function attributes, I discovered that it knows about ARM interrupt handlers already. That means I don't have to write assembly veneers for the C functions, which saved me some trouble. The only major trouble I had was setting up the interrupt vector table. It's actually a piece of code: the ARM processor transfers control to some offset in the table and expects your code to do the right thing at that point. Usually the right thing is to load the PC with the address of the actual interrupt handler. In x86, I am accustomed to encoding the address of a far jump directly into the instruction. However, with ARM's 32-bit fixed-length instructions, this is basically impossible except for limited cases. ARM assemblers will actually create a "pool" of 32-bit constants that you use in your program, and then load them using PC-relative addressing. It's a little bit of extra "magic" that they do for convenience, but it is a surprise if you are not used to having assemblers insert extra data into your code. In this case, the surprise got me because when I copied the interrupt table into place, I forgot to copy the "pool" that went with it. This resulted in unexpected "prefetch aborts" which is ARM-inology for "Page fault caused by instruction fetch."
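One way to make the "pool" explicit is to build the table by hand: eight copies of the instruction ldr pc, [pc, #0x18] followed directly by eight handler addresses. Since the ARM PC reads as the current instruction plus 8, slot i at offset 4*i loads from offset 4*i + 8 + 0x18 = 32 + 4*i, which is exactly pool entry i. The sketch below is illustrative only; the function name is hypothetical and the real handlers would still need the GCC interrupt attribute mentioned above.

```c
#include <stdint.h>

#define NUM_VECTORS     8u          /* reset, undef, SWI, prefetch abort,
                                       data abort, reserved, IRQ, FIQ */
#define LDR_PC_PC_0X18  0xE59FF018u /* encoding of: ldr pc, [pc, #0x18] */

/*
 * Fill a 16-word table: 8 load instructions, then the 8 addresses they load.
 * Copying all 64 bytes to the vector base keeps code and pool together.
 */
static void build_vector_table(uint32_t table[2 * NUM_VECTORS],
                               const uint32_t handlers[NUM_VECTORS])
{
    for (unsigned i = 0; i < NUM_VECTORS; i++) {
        table[i] = LDR_PC_PC_0X18;            /* instruction in vector slot i */
        table[NUM_VECTORS + i] = handlers[i]; /* literal it loads into the PC */
    }
}
```

Laying it out this way means the size to copy is obvious (64 bytes), rather than depending on where the assembler decided to place its literals.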

Getting timers to work took me on a bit of a runaround. Actually there are two subsystems that I am dealing with here: performance monitoring, and general-purpose timers. The perfmon system in Cortex-A8 (or ARM-v7) processors is controlled via coprocessor 15. Originally, I had picked up bad information from one of the manuals. Unfortunately, QEMU does not support perfmon. So I had to wait until I tried it on the real HW to discover that I was causing "undefined instruction" exceptions. Except that I was getting "data abort" exceptions instead, which puzzled me until I remembered that I had not yet set up a stack for "undefined instruction." The HW was telling me that the CP15 functionality I was invoking did not exist. I tracked down the correct information and plugged it in -- no more crashes, but no performance monitoring either. I was just trying to count the number of processor cycles that had passed, which is one of the built-in counter sources. Then I noticed that there was yet another register which mentioned "Enable Clock Counter" in its description. Strange, but it worked -- to enable the feature, I had to set it in two places.
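For reference, a sketch of the two-step enable on an ARMv7 core. My assumption is that the "two places" are the performance monitor control register and the count enable set register; the text above does not name them, so treat the register choice as a guess. The CP15 encodings are the ARMv7 ones as I remember them, and the inline assembly is only compiled when targeting ARM.

```c
#include <stdint.h>

#define PMCR_ENABLE       (1u << 0)  /* control register: enable all counters */
#define PMCR_CYCLE_RESET  (1u << 2)  /* control register: reset cycle counter */
#define PMCNTENSET_CYCLE  (1u << 31) /* enable-set register: cycle counter bit */

#if defined(__arm__)
/* Enable the cycle counter: both the global enable and the per-counter bit. */
static inline void cycle_counter_start(void)
{
    uint32_t pmcr;

    __asm__ volatile("mrc p15, 0, %0, c9, c12, 0" : "=r"(pmcr));
    pmcr |= PMCR_ENABLE | PMCR_CYCLE_RESET;
    __asm__ volatile("mcr p15, 0, %0, c9, c12, 0" : : "r"(pmcr));
    __asm__ volatile("mcr p15, 0, %0, c9, c12, 1" : : "r"(PMCNTENSET_CYCLE));
}

/* Read the current cycle count. */
static inline uint32_t cycle_counter_read(void)
{
    uint32_t cycles;

    __asm__ volatile("mrc p15, 0, %0, c9, c13, 0" : "=r"(cycles));
    return cycles;
}
#endif
```

Setting only the global enable leaves the cycle counter stopped, which would match the "no crashes, but no counting" symptom.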

GP Timers are a bit more involved. There are 11 of them, scattered throughout various "Power Domains" for some reason. Some have different capabilities than the others. Also there is a 32kHz timer register, which counts the number of ticks since reset. Convenient -- and I timed my perfmon counter using this, finding that approximately 720 million cycles pass per second. This corresponds to the advertised value of 720 MHz, since MHz means a million (10^6) Hertz rather than 2^20 Hertz. The odd one out is the "32kHz" timer, which actually ticks at 32768 Hz (32*2^10) and not at an even 32000 Hz. Oh well.
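The measurement itself is just a ratio: cycles counted divided by elapsed time, where elapsed time is the tick delta over the tick rate. A minimal sketch of that arithmetic, assuming the 32kHz counter runs at 32768 Hz; the function name is hypothetical and reading the two hardware counters is left to the caller.

```c
#include <stdint.h>

#define SYNC32K_HZ 32768u /* assumed tick rate of the 32kHz counter */

/*
 * Estimate the cycle counter frequency in Hz from two deltas taken over the
 * same interval. Returns 0 if no ticks elapsed.
 */
static inline uint64_t estimate_hz(uint32_t cycles_elapsed, uint32_t ticks_elapsed)
{
    if (ticks_elapsed == 0)
        return 0;
    /* Widen before multiplying so the product cannot overflow 32 bits. */
    return ((uint64_t)cycles_elapsed * SYNC32K_HZ) / ticks_elapsed;
}
```

Measuring over a full second (32768 ticks) and counting 720,000,000 cycles yields 720,000,000 Hz. Shorter intervals work too, at the cost of coarser resolution.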

The GP Timers can be programmed to raise an interrupt on overflow, or when they match a certain value. There are also lots of other little features I will need to check out at some point. The first thing I tested is the clock-rate for GP timer 1. I expected it to be 32kHz, which seems to be the default reset value according to the manual. However, I only discovered this after trying to figure out why it was ticking approximately 100 times faster than expected. After spending a long time in the TRM (these manuals do not make it easy!) I found a register called CM_CLKSEL_WKUP which has a bit controlling GP timer 1. It can be sourced from either the 32K_FCLK (default), or the SYS_CLK. I checked it, and on my system it was defaulting to SYS_CLK instead of 32K_FCLK. Since SYS_CLK is driving the processor, it is a lot faster, which explains the speedy tick rate. I do not know why it was not set at the stated default. But now I have a working, predictable timer.
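A sketch of the two pieces involved: forcing GP timer 1 back onto the 32kHz source, and picking a start value so the counter overflows after a chosen number of ticks. I am assuming the GP timer 1 select is bit 0 of CM_CLKSEL_WKUP, with 0 meaning 32K_FCLK and 1 meaning SYS_CLK; verify that against the TRM. The helpers work on plain values so the actual register reads and writes stay with the caller, and all names are made up.

```c
#include <stdint.h>

#define CLKSEL_GPT1 (1u << 0) /* assumed bit: 0 = 32K_FCLK, 1 = SYS_CLK */

/* True if the register value selects SYS_CLK for GP timer 1. */
static inline int gpt1_on_sys_clk(uint32_t cm_clksel_wkup)
{
    return (cm_clksel_wkup & CLKSEL_GPT1) != 0;
}

/* Register value with GP timer 1 switched to the 32kHz clock. */
static inline uint32_t gpt1_use_32k(uint32_t cm_clksel_wkup)
{
    return cm_clksel_wkup & ~CLKSEL_GPT1;
}

/*
 * The timer counts up and overflows when it wraps past 0xFFFFFFFF, so
 * starting at (2^32 - ticks) gives an overflow after 'ticks' counts.
 */
static inline uint32_t gpt_reload_for(uint32_t ticks)
{
    return 0u - ticks; /* unsigned wraparound is well defined in C */
}
```

At 32768 Hz, a 10 ms period is about 327 ticks, so the reload value would be 0xFFFFFEB9.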

All I need is a primitive context switching experiment and I can do the microbenchmarks I had set out to do.

Discussion (2) | November 14th, 2011 Categories: hacking

Beagle Board

Got my Beagle Board up and running. I purchased a 5V, 1.2A, 2.1mm, center-positive power supply such as this one. I am also using an AT-Everex style serial port header such as this one.

There is a wealth of information available for those interested in booting something like Angstrom Linux on their board. It is much harder to find information for barebones programming on the Beagle Board.

You can start with the Technical Reference Manual from the TI OMAP3530 product page. However, it is extremely dense and difficult to understand.

I managed to find some useful information on the web in this blog and also this stackoverflow answer.

I distilled these sources down into a small demo, available on GitHub: Puppy, a barebones Beagle Board example.

You can get a software emulator for Beagle Board by compiling the version of QEMU found here: http://meego.gitorious.org/qemu-maemo

I recommend using ./configure --target-list=arm-softmmu

Then there will be a -M beagle option available. You will need to assemble a NAND flash image for the -mtdblock parameter. There are some guidelines on the Internet for how to do this. Note that most of them offer an out-of-date u-boot.bin file for you to download. If you want to use the same version that is flashed onto the Beagle Board, then you need to go to http://gitorious.org/beagleboard-validation. Or you can download the binary from the Puppy git repository, which also has scripts to create the NAND flash image.

Note, if you are using the Code Sourcery GCC for GNU/Linux, then you will need to modify the u-boot source code: http://lists.denx.de/pipermail/u-boot/2010-May/071363.html.

Discussion (0) | October 25th, 2011 Categories: hacking