Introduction:

We address the problem of query-based video search in airborne data. The user specifies a query of interest, the data is processed, and the space-time video segments matching the query are returned. The problem is challenging because routes vary widely and activity durations are uncertain. Previous work relied on feature point tracks. Tracks, however, are sensitive to occlusion and low contrast, both of which are common in airborne data. Tracking errors therefore tend to accumulate, which leads to poor search results. Other approaches use topic modeling, which is computationally expensive.

Description:

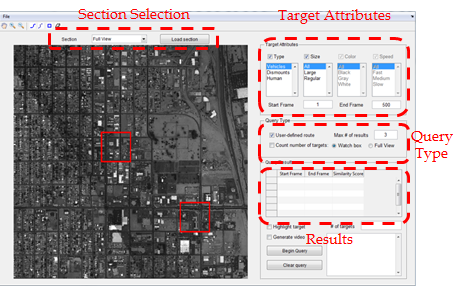

The video search algorithm demonstrated here consists of two stages. The first stage extracts low-level features and hashes them into a hash table. This enables efficient indexing of the video data and, at the same time, greatly reduces the amount of data that must be stored. The second stage retrieves partial matches to the user-created query and assembles them into full matches using dynamic programming. This makes our approach robust to feature extraction errors and to inaccurate user input.
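The two stages can be illustrated with a minimal Python sketch. It is not the implementation behind this page: the feature format `(frame, x, y, dx, dy)`, the grid-and-direction quantization in `quantize`, and the scoring parameters `max_gap` and `skip_penalty` are all assumptions chosen for illustration. The sketch only shows the general idea of indexing quantized features in a hash table and then chaining time-ordered partial matches with dynamic programming, so that a missed feature lowers the score instead of breaking the match.

```python
from collections import defaultdict


def quantize(x, y, dx, dy, cell=16):
    """Map a motion feature to a coarse hashable key (hypothetical scheme)."""
    # Direction is reduced to four bins by dominant axis and sign.
    if abs(dx) >= abs(dy):
        direction = "E" if dx >= 0 else "W"
    else:
        direction = "S" if dy >= 0 else "N"
    return (int(x) // cell, int(y) // cell, direction)


def build_index(features):
    """Stage 1: hash features into a table mapping key -> sorted frame list."""
    index = defaultdict(set)
    for frame, x, y, dx, dy in features:
        index[quantize(x, y, dx, dy)].add(frame)
    return {key: sorted(frames) for key, frames in index.items()}


def assemble(index, query, max_gap=30, skip_penalty=0.5):
    """Stage 2: chain partial matches into the best full match.

    query is an ordered list of keys. Returns (score, start_frame, end_frame)
    or None when no query key occurs in the index.
    """
    n = len(query)
    # chains[i][t] = (score, start_frame) of the best chain whose last
    # matched element is query step i observed at frame t.
    chains = [dict() for _ in range(n)]
    best = None
    for i, key in enumerate(query):
        for t in index.get(key, []):
            # Option 1: start a new chain here, paying for skipped steps.
            score, start = 1.0 - skip_penalty * i, t
            # Option 2: extend an earlier chain that ended recently enough.
            for j in range(i):
                for f, (s, st) in chains[j].items():
                    if 0 <= t - f <= max_gap:
                        cand = s + 1.0 - skip_penalty * (i - j - 1)
                        if cand > score:
                            score, start = cand, st
            chains[i][t] = (score, start)
            # Pay for query steps that remain unmatched after step i.
            total = score - skip_penalty * (n - 1 - i)
            if best is None or total > best[0]:
                best = (total, start, t)
    return best


# Toy data: one object moving east through three grid cells.
features = [(10, 5, 5, 1, 0), (20, 20, 5, 1, 0), (30, 40, 5, 1, 0)]
query = [(0, 0, "E"), (1, 0, "E"), (2, 0, "E")]
full = assemble(build_index(features), query)
# Same route, but the middle feature was never extracted.
partial = assemble(build_index([features[0], features[2]]), query)
```

In this toy run, the route is still recovered over the same frame span when the middle feature is missing, only with a lower score. That is the kind of tolerance to extraction errors the second stage is meant to provide.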

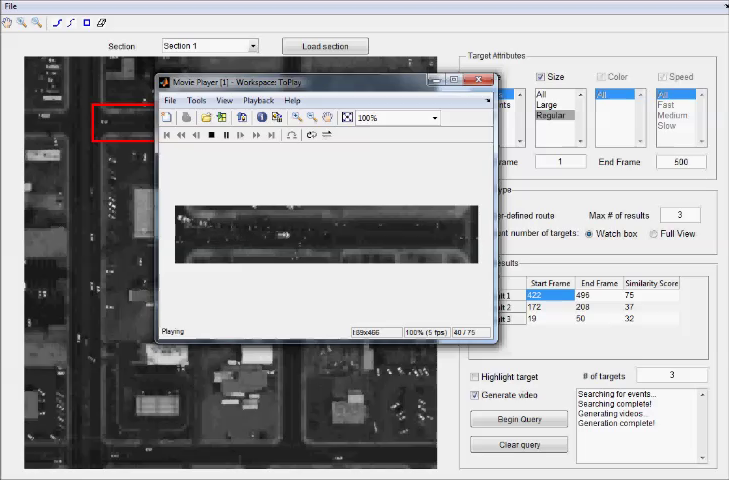

Three main types of queries are explored: Watch Box, Single Object Retrieval and Multiple Object Retrieval. Watch Box is the problem of counting the number of objects (e.g., vehicles) entering and exiting a specific region within a given time frame. Single Object Retrieval consists of finding the space-time locations of objects (e.g., vehicles) that move along a user-specified route. Multiple Object Retrieval concentrates on queries containing sequential interactions between multiple objects, such as the dismount problem, where a moving vehicle stops for a while and passengers get out.
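To make the Watch Box query concrete, the following Python sketch counts region entries and exits within a time window. It only illustrates what the query asks for. It assumes idealized per-object observations `(obj_id, frame, x, y)`, which the approach described on this page does not rely on, and the function name `watch_box_counts` and the box format `(x0, y0, x1, y1)` are hypothetical.

```python
def watch_box_counts(observations, box, t_start, t_end):
    """Count (entered, exited) events for a rectangular region.

    observations: iterable of (obj_id, frame, x, y), assumed idealized.
    box: (x0, y0, x1, y1) with x0 <= x1 and y0 <= y1.
    Only frames in [t_start, t_end] are considered.
    """
    x0, y0, x1, y1 = box
    last_inside = {}  # obj_id -> whether its previous observation was inside
    entered = exited = 0
    for obj_id, frame, x, y in sorted(observations, key=lambda o: o[1]):
        if not (t_start <= frame <= t_end):
            continue
        inside = x0 <= x <= x1 and y0 <= y <= y1
        was_inside = last_inside.get(obj_id)
        # The first observation of an object in the window sets its state
        # without counting as an event.
        if was_inside is not None:
            if inside and not was_inside:
                entered += 1
            elif was_inside and not inside:
                exited += 1
        last_inside[obj_id] = inside
    return entered, exited


# Object "a" drives through the box; object "b" stays inside it.
observations = [
    ("a", 1, 0, 0), ("a", 2, 5, 5), ("a", 3, 20, 20),
    ("b", 1, 5, 5), ("b", 2, 6, 6),
]
counts = watch_box_counts(observations, box=(2, 2, 10, 10), t_start=0, t_end=10)
```

Shrinking the time window changes the result: with `t_end=2`, object "a" has entered the box but its exit at frame 3 falls outside the window.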

Snapshot of search interface:

Results:

A snapshot of a Watch Box query:

Video Demo:

Disclaimer: The actors in this video are fictional and the video has been simulated on a property not accessible to the general public.