Exploratory Video Search Using Low-Level Motion Features

Background:

Surveillance videos are used in scene investigation to gather evidence after events take place. Manually searching such large archives is tedious and impractically time-consuming. This motivates the development of an automated technique for content-based video retrieval. The technique must be efficient in memory and run time so that it scales to large data sets, and it must retrieve video segments matching user-defined queries while remaining robust to common video problems such as low frame rate, low contrast and occlusion.

Description:

We address the problem of retrieving video segments that contain user-defined events of interest, with a focus on detecting objects moving along routes. This problem is challenging because routes vary widely and the duration of an activity is uncertain. Current approaches use topic modeling and are usually computationally expensive.

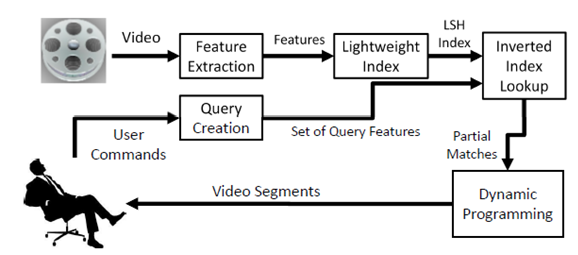

Our technique consists of two steps. The first extracts low-level features and hashes them into an inverted index using locality-sensitive hashing (LSH). The second extracts partial matches to the user-created query and assembles them into full matches using dynamic programming (DP). DP exploits causality to assemble the indexed low-level features into a video segment that matches the query route.
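The two steps can be illustrated with a minimal Python sketch. It rests on several assumptions that are not taken from the paper: random-hyperplane hashing stands in for the LSH family, each feature is a plain motion vector tagged with a frame number, and the DP is a simple feasibility chain that links one partial match per query step in increasing time order, with a hypothetical `max_gap` limit between consecutive steps. The names `make_hasher`, `build_index` and `assemble_route` are illustrative only.

```python
import random
from collections import defaultdict


def make_hasher(dim, n_bits, seed=0):
    """Random-hyperplane LSH: similar vectors tend to receive the same key."""
    rng = random.Random(seed)
    planes = [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(n_bits)]

    def hash_vec(vec):
        # One bit per hyperplane: which side of the plane the vector lies on.
        return tuple(int(sum(p * x for p, x in zip(plane, vec)) >= 0.0)
                     for plane in planes)

    return hash_vec


def build_index(features, hash_vec):
    """Step 1: inverted index mapping hash key -> sorted list of frame numbers."""
    index = defaultdict(list)
    for frame, vec in features:
        index[hash_vec(vec)].append(frame)
    return {key: sorted(frames) for key, frames in index.items()}


def assemble_route(step_hits, max_gap):
    """Step 2: chain partial matches into a full match.

    step_hits[k] lists the frames where query step k matched (non-empty list
    of steps assumed). Returns one frame per step, strictly increasing in
    time (causality), or None if no chain exists.
    """
    # back[k][t] = frame chosen for step k-1 when step k is matched at frame t.
    back = [{t: None for t in step_hits[0]}]
    for hits in step_hits[1:]:
        prev, cur = back[-1], {}
        for t in hits:
            candidates = [p for p in prev if 0 < t - p <= max_gap]
            if candidates:
                cur[t] = max(candidates)  # link to the closest earlier match
        back.append(cur)
    if not back[-1]:
        return None
    t, path = min(back[-1]), []
    for k in range(len(back) - 1, -1, -1):  # backtrack through the pointers
        path.append(t)
        t = back[k][t]
    return path[::-1]


# Toy archive: (frame, motion vector) pairs; the query route is right, left, right.
right, left = (1.0, 0.0), (-1.0, 0.0)
features = [(0, right), (2, left), (3, right), (10, left), (11, right), (30, left)]
hash_vec = make_hasher(dim=2, n_bits=8)
index = build_index(features, hash_vec)
step_hits = [index.get(hash_vec(step), []) for step in (right, left, right)]
print(assemble_route(step_hits, max_gap=5))  # [0, 2, 3]
```

Because lookups touch only the hash buckets of the query steps, the cost of a query in this sketch depends on the number of partial matches rather than on the length of the archive, which is the property the inverted index is meant to provide.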

We examine CCTV and airborne footage, whose low contrast makes motion extraction more difficult. We generate robust motion estimates for airborne data using a tracklet generation algorithm, while we use Horn and Schunck's approach to generate motion estimates for CCTV. Our approach handles long routes, low contrast and occlusion. We derive bounds on the rate of false positives and demonstrate the effectiveness of the approach for counting, motion pattern recognition and abandoned object applications.
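For readers unfamiliar with Horn and Schunck's method, the following is a small dependency-free sketch of its iterative update. It is only an illustration under stated assumptions: frames are lists of rows of floats, the neighbourhood average uses four neighbours rather than the weighted eight-neighbour kernel of the original formulation, and the parameters `alpha` (smoothness weight) and `n_iter` are hypothetical defaults, not the settings used in this work.

```python
def horn_schunck(frame1, frame2, alpha=1.0, n_iter=100):
    """Estimate dense flow (u, v) in pixels per frame between two gray frames."""
    h, w = len(frame1), len(frame1[0])

    def px(img, y, x):
        # Replicate border pixels so that every neighbour exists.
        return img[min(max(y, 0), h - 1)][min(max(x, 0), w - 1)]

    def grid(fn):
        return [[fn(y, x) for x in range(w)] for y in range(h)]

    # Spatial derivatives: central differences averaged over both frames.
    ix = grid(lambda y, x: 0.25 * (px(frame1, y, x + 1) - px(frame1, y, x - 1)
                                   + px(frame2, y, x + 1) - px(frame2, y, x - 1)))
    iy = grid(lambda y, x: 0.25 * (px(frame1, y + 1, x) - px(frame1, y - 1, x)
                                   + px(frame2, y + 1, x) - px(frame2, y - 1, x)))
    # Temporal derivative: simple frame difference.
    it = grid(lambda y, x: frame2[y][x] - frame1[y][x])

    u = grid(lambda y, x: 0.0)
    v = grid(lambda y, x: 0.0)
    for _ in range(n_iter):
        # Local flow averages (four-neighbour mean).
        u_avg = grid(lambda y, x: 0.25 * (px(u, y - 1, x) + px(u, y + 1, x)
                                          + px(u, y, x - 1) + px(u, y, x + 1)))
        v_avg = grid(lambda y, x: 0.25 * (px(v, y - 1, x) + px(v, y + 1, x)
                                          + px(v, y, x - 1) + px(v, y, x + 1)))
        # Brightness-constancy residual, damped by the smoothness weight.
        r = grid(lambda y, x: (ix[y][x] * u_avg[y][x] + iy[y][x] * v_avg[y][x]
                               + it[y][x])
                 / (alpha ** 2 + ix[y][x] ** 2 + iy[y][x] ** 2))
        u = grid(lambda y, x: u_avg[y][x] - ix[y][x] * r[y][x])
        v = grid(lambda y, x: v_avg[y][x] - iy[y][x] * r[y][x])
    return u, v


# Toy input: a horizontal intensity ramp shifted one pixel to the right.
frame1 = [[float(x) for x in range(9)] for _ in range(9)]
frame2 = [[float(x - 1) for x in range(9)] for _ in range(9)]
u, v = horn_schunck(frame1, frame2)
print(round(u[4][4], 2), round(v[4][4], 2))
```

On this toy input the flow at the image centre should come out close to one pixel to the right with no vertical component. Low-contrast footage yields small gradients `ix` and `iy`, so the smoothness term dominates the update, which is one way to see why motion extraction becomes harder on such data.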

Results:

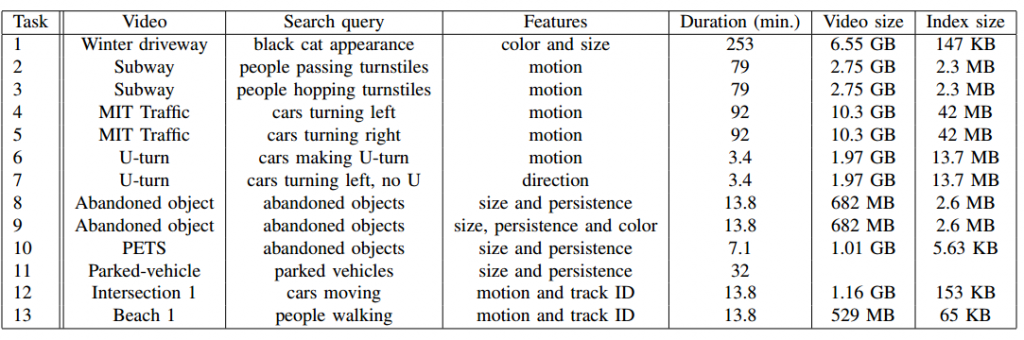

As shown in the following table, we tested different queries to recover moving objects based on their color, size, direction, activity, persistence and tracklets.

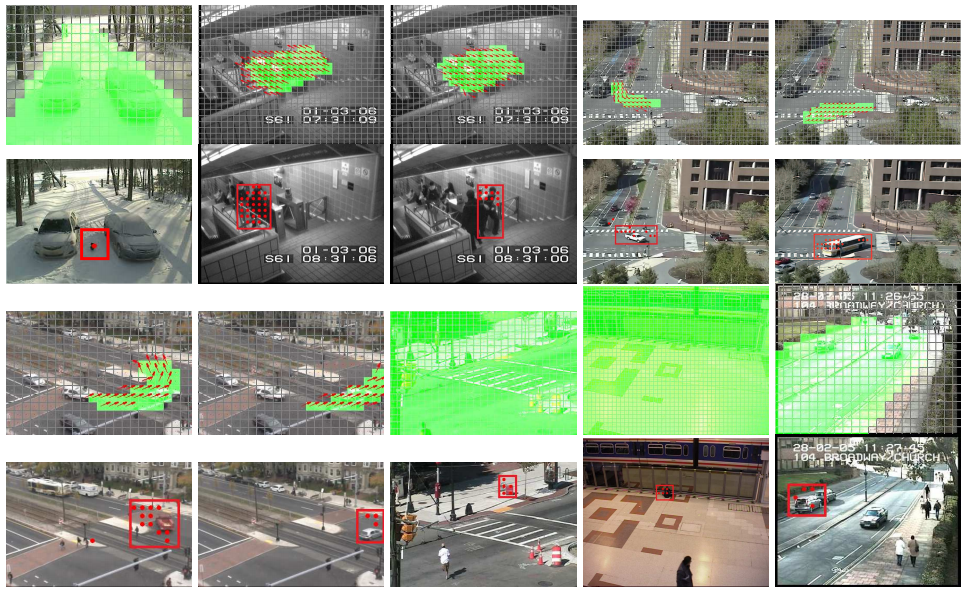

Here are some screenshots of the query tasks. Each image shows a search query (green ROI) and a retrieved frame (red rectangle). The red dots correspond to the tree whose profile fits the query.

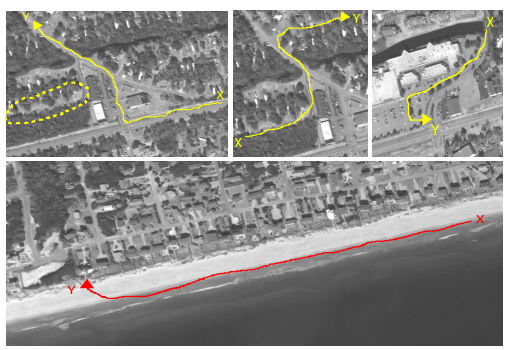

Examples of queried routes in airborne data: routes are shown as yellow/red arrows that start at point X and end at point Y. Some of the routes undergo strong occlusion (see dashed yellow region, top row, first column) and others include many turns (see first row, last column).

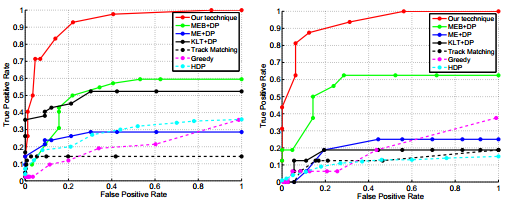

The ROC curves are shown below:

Publications:

G. Castanon et al., Exploratory Search of Long Surveillance Videos (long paper), ACM Multimedia, 2012.